AB Testing

Jan 22, 2026

Does A/B Testing Hurt Your Meta Ads?

Does A/B testing hurt your Meta Ads performance? How to investigate CPC spikes during tests.

You launched an A/B test. Your CPCs spiked. You killed the test.

It's a pattern we see all the time. Something changed, something got worse, so the change must be the cause.

But correlation isn't causation. And before you blame your test, you need to actually investigate.

Start With Your Own Historical Data

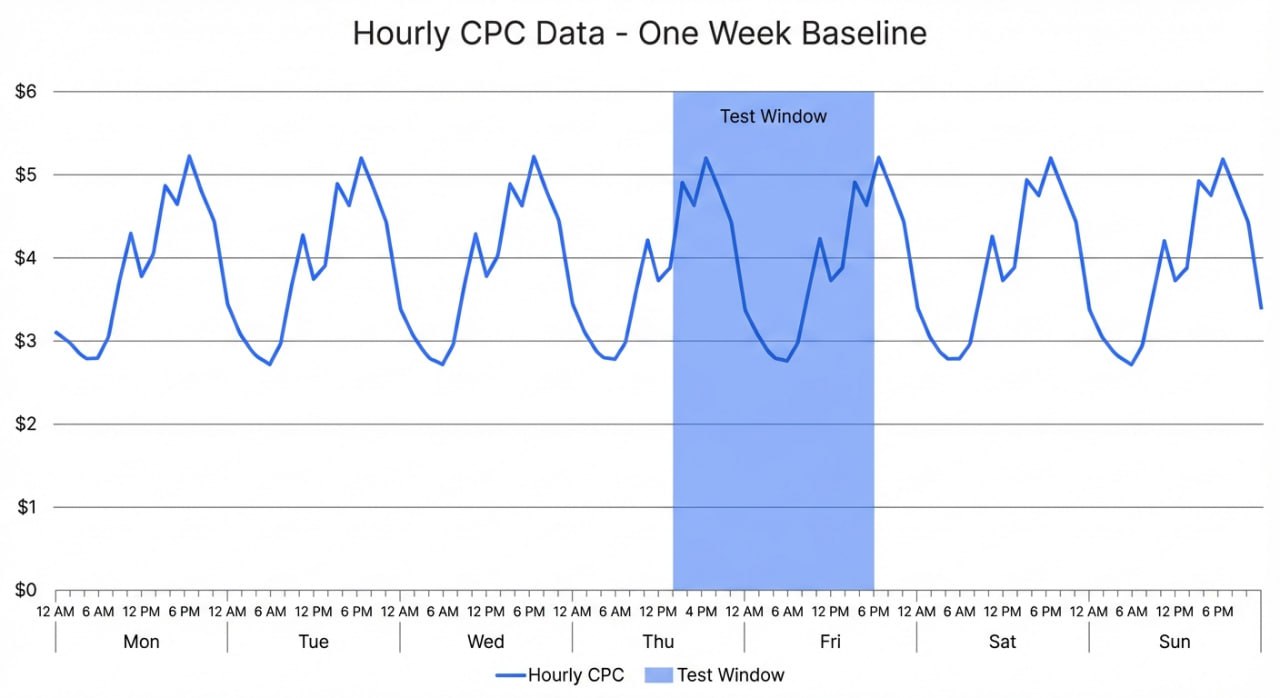

CPCs are volatile. They move based on time of day, day of week, competition, seasonality, and factors you'll never fully understand. What looks like a spike might just be normal variation for your account.

Pull your hourly CPC data for the week or two before your test. Look for patterns. Are there certain hours where you consistently see higher costs? Days that run hotter than others?
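As a rough illustration, here's one way to look for those patterns once you've exported hourly data. The row layout (timestamp, spend, clicks) and the sample numbers are assumptions for this sketch, not a Meta export format.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean, pstdev


def hourly_cpc_baseline(rows):
    """Summarize CPC for each (weekday, hour) slot.

    rows: iterable of (timestamp, spend, clicks) tuples, one per hour.
    Returns {(weekday, hour): {"mean": float, "stdev": float, "n": int}},
    where weekday follows datetime.weekday() (Monday is 0).
    """
    buckets = defaultdict(list)
    for ts, spend, clicks in rows:
        if clicks == 0:
            continue  # CPC is undefined for an hour with no clicks
        buckets[(ts.weekday(), ts.hour)].append(spend / clicks)
    return {
        slot: {"mean": mean(cpcs), "stdev": pstdev(cpcs), "n": len(cpcs)}
        for slot, cpcs in buckets.items()
    }


# Made-up sample rows, purely for illustration.
rows = [
    (datetime(2026, 1, 6, 14), 30.0, 10),   # a Tuesday, 2pm
    (datetime(2026, 1, 13, 14), 45.0, 15),  # the following Tuesday, 2pm
    (datetime(2026, 1, 6, 3), 5.0, 5),      # Tuesday, 3am
]
baseline = hourly_cpc_baseline(rows)
for (weekday, hour), stats in sorted(baseline.items()):
    print(weekday, hour, round(stats["mean"], 2), stats["n"])
```

Slots with a high mean are your account's normally expensive hours. A slot with a large spread is one where a single reading tells you very little.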

One merchant we worked with was convinced their test caused a CPC spike. When we pulled their historical data, we found their CPCs followed a consistent pattern that had nothing to do with the test. The "spike" they saw during the test matched what their account had been doing all along during those same hours.

The test ended. CPCs stayed elevated for a few more hours. That wasn't the test lingering... it was just their normal pattern continuing.

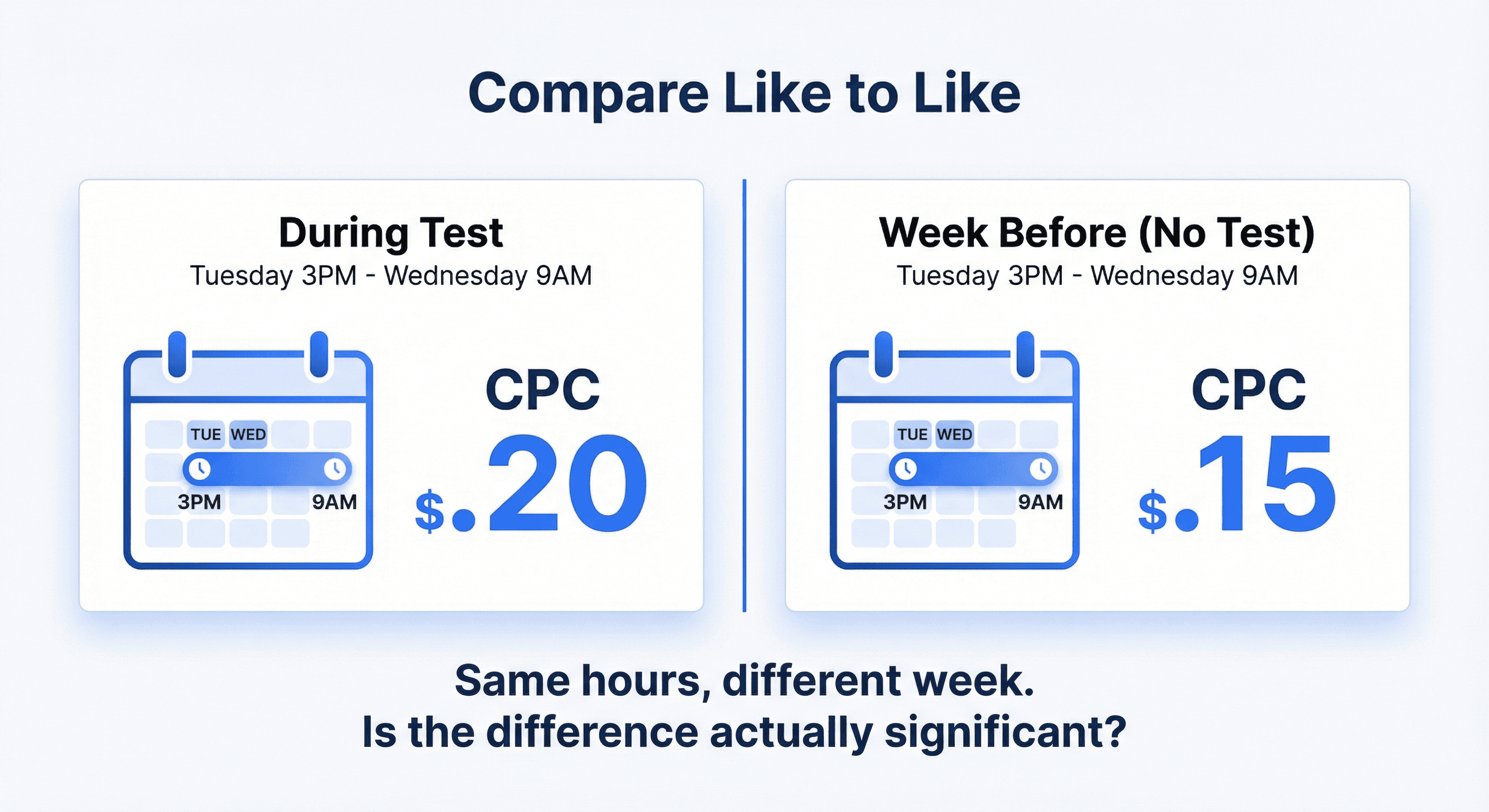

Compare Like to Like

If your test ran Tuesday afternoon through Wednesday morning, compare that to the previous Tuesday afternoon through Wednesday morning. Same hours, different week. What do you see?

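This is the most direct way to tell whether what you're seeing is actually anomalous. A CPC of $4 might feel high, but if you were hitting $4 at the same time last week without any test running, the test isn't your problem. If you have more than one prior week of data, check those too. A single week is only one comparison point.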
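Here's a minimal sketch of that same-hours comparison. It assumes you've loaded hourly data into a dict keyed by timestamp; the function names and sample figures are hypothetical.

```python
from datetime import datetime, timedelta


def window_cpc(hourly, start, end):
    """CPC (total spend / total clicks) for hours in [start, end)."""
    spend = 0.0
    clicks = 0
    for ts, (hour_spend, hour_clicks) in hourly.items():
        if start <= ts < end:
            spend += hour_spend
            clicks += hour_clicks
    return spend / clicks if clicks else None


def compare_to_prior_week(hourly, start, end):
    """Compare the test window to the same hours exactly 7 days earlier."""
    shift = timedelta(days=7)
    test = window_cpc(hourly, start, end)
    base = window_cpc(hourly, start - shift, end - shift)
    # Relative change is only defined when both windows have a usable CPC.
    change = None if test is None or not base else (test - base) / base
    return {"test": test, "baseline": base, "pct_change": change}


# Made-up data: {timestamp: (spend, clicks)} for two Tuesday afternoons.
hourly = {
    datetime(2026, 1, 6, 14): (40.0, 10),
    datetime(2026, 1, 6, 15): (40.0, 10),
    datetime(2026, 1, 13, 14): (42.0, 10),
    datetime(2026, 1, 13, 15): (38.0, 10),
}
result = compare_to_prior_week(
    hourly, datetime(2026, 1, 13, 14), datetime(2026, 1, 13, 16)
)
print(result)
```

The sketch divides total spend by total clicks across the window rather than averaging hourly CPCs, so a quiet hour with a handful of clicks doesn't skew the comparison.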

Check What Happened During the Test, Not Just When It Started

Here's a question worth asking: did CPCs stay elevated the entire time the test was live?

That same merchant ran two identical tests at different times. During one, CPCs rose. During the other, CPCs actually fell while impressions and clicks increased.

If a test were truly hurting your Meta performance, you'd expect consistent degradation whenever it's live. If you see issues during one test window but not another, you're probably looking at normal variation, not test interference.
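That consistency check is simple enough to write down. The sketch below assumes you've already worked out each test window's CPC change against its own like-for-like baseline. The 5% tolerance is an arbitrary placeholder, not a recommended threshold.

```python
def cpc_rose_in_every_window(pct_changes, tolerance=0.05):
    """True only if CPC rose by more than `tolerance` in every test window.

    pct_changes: relative CPC change per window (0.10 means +10%).
    tolerance: assumed noise band; smaller moves are not counted as a rise.
    """
    return bool(pct_changes) and all(c > tolerance for c in pct_changes)


# Hypothetical numbers: CPC up in one window, down in the other.
print(cpc_rose_in_every_window([0.18, -0.07]))
```

A result of False doesn't prove the test is harmless. It does mean the "test hurts CPCs" story fails to explain every window, which points back toward ordinary variation.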

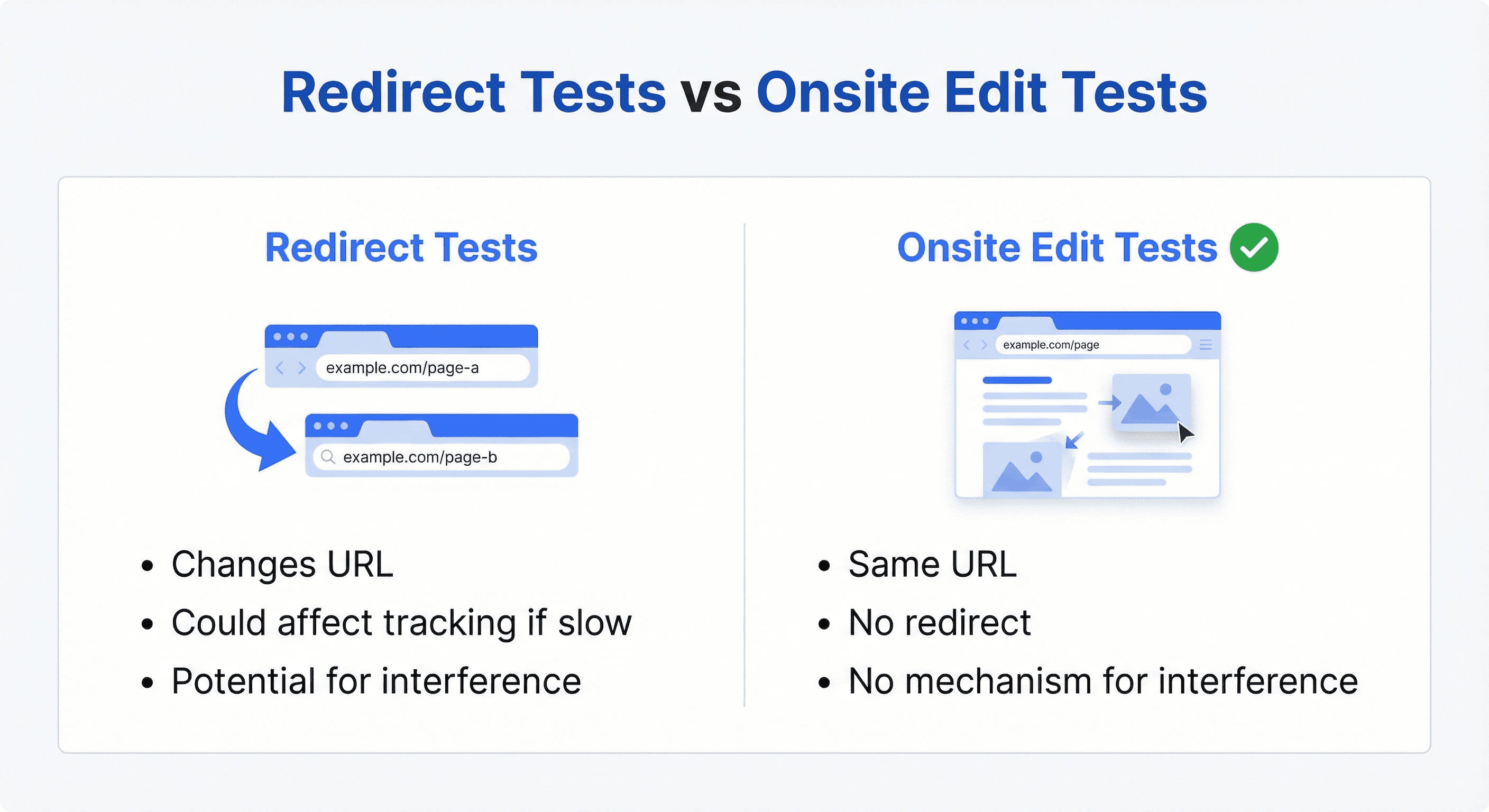

The Type of Test Matters

Not all A/B tests work the same way. The difference matters here.

Redirect tests send visitors to entirely different URLs. In theory, these could affect Meta's conversion tracking if something goes wrong with the redirect. A slow redirect might look like a bounce to Meta, which could weigh on the quality signals Meta uses to rank your ads.

Onsite edit tests change elements on your existing pages without changing the URL. There's no redirect, no URL change, nothing that would signal to Meta that anything is different. The mechanism for interference just isn't there.

If you're running onsite edits and seeing CPC issues, the test probably isn't your culprit, unless the edit touches something that fires a pixel event.

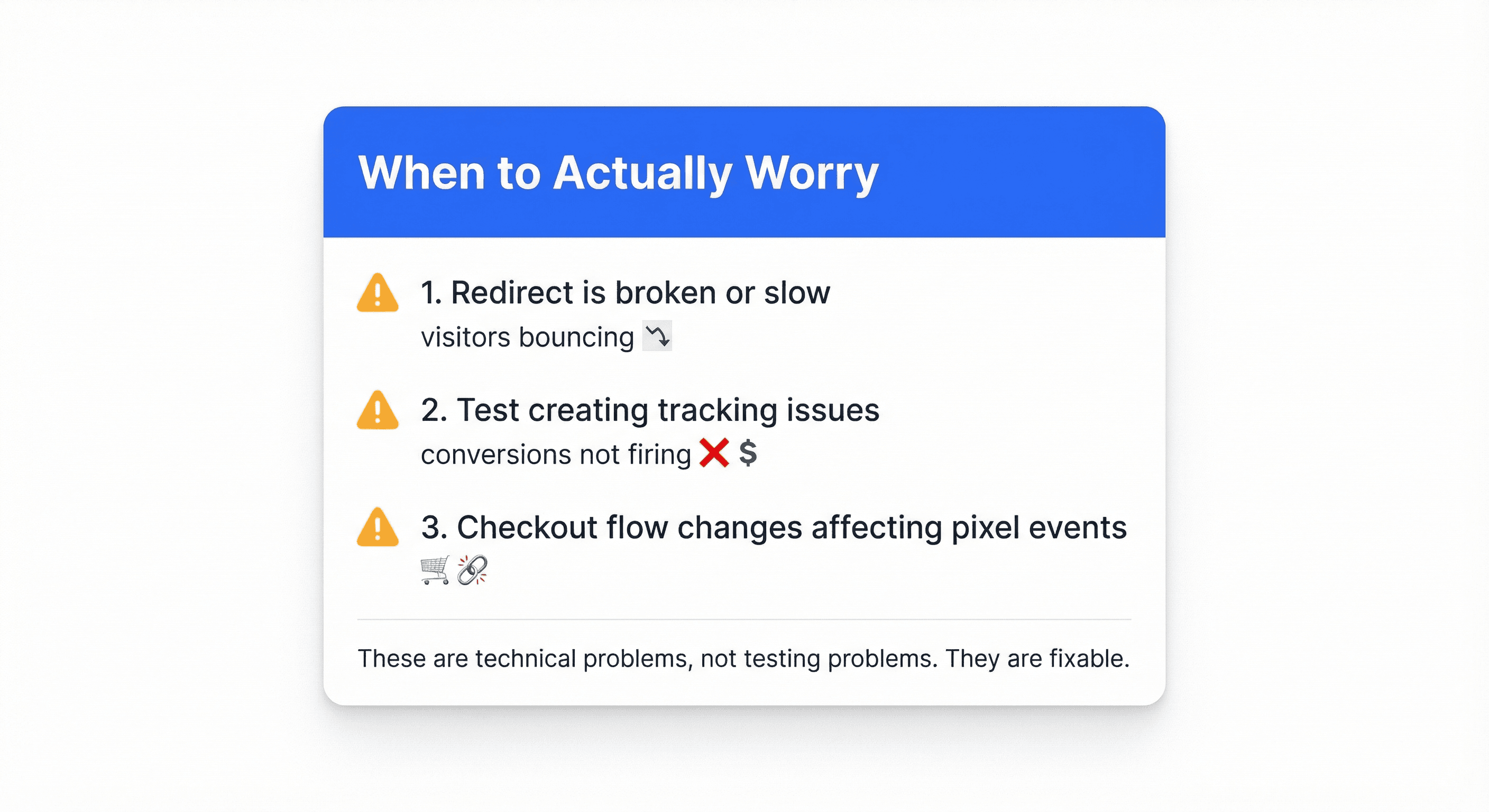

When to Actually Worry

There are scenarios where a test could legitimately interfere with Meta. Watch for these:

Your redirect is broken or slow. If visitors are bouncing because the redirect takes too long, that's a real signal to Meta.

Your test is creating tracking issues. If conversions aren't firing correctly in one variant, Meta's optimization will suffer. Check your experiment analytics to verify conversions are tracking properly.

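You're testing something that changes your checkout flow in a way that affects pixel events. If a variant changes when or whether events like InitiateCheckout or Purchase fire, Meta receives a different conversion signal for that traffic.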

These are technical problems, not inherent issues with testing. They're fixable. And they're diagnosable... you'd see them in your conversion tracking data, not just your CPCs.
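One way to check for a tracking problem is to compare, per variant, how many real orders also produced a pixel purchase event. The sketch below assumes you can get both counts from your store backend and your analytics. The field names and the 80% threshold are hypothetical.

```python
def pixel_capture_rate(orders, pixel_purchases):
    """Share of backend orders that also produced a pixel purchase event."""
    return pixel_purchases / orders if orders else None


def flag_tracking_gaps(variants, min_rate=0.8):
    """Return {variant: capture_rate} for variants below `min_rate`.

    variants: {name: {"orders": int, "pixel_purchases": int}}
    min_rate: assumed cutoff; pick one that fits your normal capture rate.
    """
    flagged = {}
    for name, counts in variants.items():
        rate = pixel_capture_rate(counts["orders"], counts["pixel_purchases"])
        if rate is not None and rate < min_rate:
            flagged[name] = rate
    return flagged


# Made-up counts for a two-variant test.
variants = {
    "control": {"orders": 100, "pixel_purchases": 95},
    "variant_b": {"orders": 100, "pixel_purchases": 40},
}
print(flag_tracking_gaps(variants))
```

Comparing against backend orders, rather than just comparing conversion rates between variants, separates "this variant converts worse" from "this variant's conversions aren't being reported."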

The Bottom Line

A/B testing doesn't inherently hurt your Meta performance. But Meta performance is noisy, and tests give you a convenient thing to blame when numbers move in the wrong direction.

Before you kill a test, do the work. Pull your historical data. Compare the same hours from previous weeks. Check whether the pattern holds across multiple test windows.

You might find that the "problem" you're seeing was happening all along.
